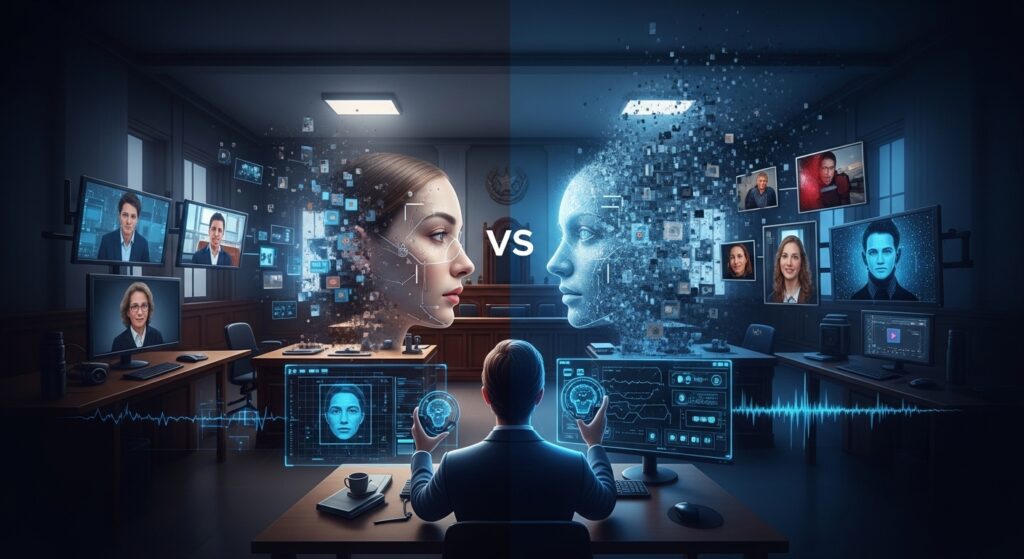

The world of digital evidence has changed faster than most courts or attorneys realize. Artificial intelligence is rewriting the rules of what’s real, and the legal system is struggling to catch up. Enter the age of deepfakes and synthetic media.

At Mako Forensics, we live in that gray zone where digital truth and synthetic fabrication collide. My background in law enforcement and forensic examination has taught me one thing above all: the truth doesn’t fear inspection.

But in the age of generative AI, “truth” now demands deeper inspection than ever before.

It used to take a Hollywood-level budget to convincingly fake a video or audio recording. Not anymore. With AI tools now accessible to anyone with a laptop, synthetic media, often called deepfakes (videos, audio, and images of people saying or doing things they never did), can be produced in minutes.

Courts and attorneys are finding that these tools are evolving faster than the detection technologies meant to expose them. That’s creating a massive challenge for evidentiary reliability. Today’s question isn’t just “Has this file been altered?” It’s “Was this file ever real to begin with?”

One of my mentors (although he doesn’t know it yet) is Hany Farid, a long-time digital forensics expert. He discusses synthetic media here, if you’d like to learn more from an expert.

In my forensic experience, AI-related evidence generally falls into two categories.

It’s that second category that keeps forensic professionals up at night. Because once the integrity of a single frame or syllable is in doubt, the credibility of the entire case can unravel.

For decades, the gold standard for authenticity was straightforward: under Federal Rule of Evidence 901, a party only had to show “evidence sufficient to support a finding that the item is what the proponent claims it is.”

That worked when we were dealing with analog photos and videotapes. But when an AI model can generate a “real-looking” recording from a few sample images or voice clips, that low threshold falls apart.

And here’s where the danger multiplies. Even genuine evidence is now being dismissed under the “liar’s dividend”: the idea that anyone can claim real footage is fake simply because deepfakes exist. That dynamic undermines the foundation of justice itself: confidence in what’s presented as truth.

We’re starting to see movement in the legal community.

For attorneys, insurers, and corporate clients, synthetic media changes how cases must be built. It’s no longer enough to have a video or audio file that “looks” real. You must know where it came from, how it was processed, and who touched it along the way.
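Knowing “who touched it along the way” is what examiners call chain of custody. One common building block is a cryptographic hash recorded each time the file changes hands: if the hash ever differs from the one taken at acquisition, the file has been altered. The Python below is a minimal sketch under that assumption. The `CustodyLog` and `CustodyEntry` names and the record layout are hypothetical, not Mako Forensics’ actual tooling or any standard custody format.

```python
import hashlib
import os
import tempfile
from dataclasses import dataclass, field
from datetime import datetime, timezone


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large video files don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


@dataclass
class CustodyEntry:
    handler: str    # who touched the file
    action: str     # what they did with it
    sha256: str     # file hash at the time of the action
    timestamp: str  # UTC time the entry was recorded


@dataclass
class CustodyLog:
    """Hypothetical chain-of-custody record (illustrative, not a standard)."""
    path: str
    entries: list[CustodyEntry] = field(default_factory=list)

    def record(self, handler: str, action: str) -> CustodyEntry:
        # Re-hash the file every time someone handles it.
        entry = CustodyEntry(
            handler=handler,
            action=action,
            sha256=sha256_of_file(self.path),
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self.entries.append(entry)
        return entry

    def unchanged_since_acquisition(self) -> bool:
        """True if every logged hash and the current hash match the first one."""
        if not self.entries:
            return False
        first = self.entries[0].sha256
        logged_ok = all(e.sha256 == first for e in self.entries)
        return logged_ok and sha256_of_file(self.path) == first


if __name__ == "__main__":
    # A throwaway file stands in for a video exhibit.
    fd, path = tempfile.mkstemp()
    os.write(fd, b"original footage bytes")
    os.close(fd)
    log = CustodyLog(path)
    log.record("Examiner A", "acquired from client device")
    log.record("Examiner B", "reviewed copy")
    print(log.unchanged_since_acquisition())  # True
    with open(path, "ab") as fh:
        fh.write(b"tampered")
    print(log.unchanged_since_acquisition())  # False
    os.remove(path)
```

Note the limit of this check: a matching hash shows the file hasn’t changed since it was acquired, not that its content was ever real. A deepfake hashed at acquisition verifies just as cleanly, which is why provenance and content analysis still matter.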

That’s where digital forensics plays a critical role. At Mako Forensics, our process includes:

If your firm or company handles digital evidence, consider these steps:

We’ve entered a time when “seeing is believing” has lost its meaning. For the courts, for litigators, and for the forensic community, authenticity is the new battlefield.

At Mako Forensics, our mission is simple: to uncover the truth within the noise. Whether it’s a disputed video in a trucking accident case, a questionable social-media clip in civil litigation, or a manipulated image threatening someone’s reputation, our job is to separate what’s real from what’s not.

In the end, that’s what justice demands.

If you want to read more about the dangers of synthetic media and how it could affect the direction of public opinion, you can read it here.