When “Is it real?” really matters: How to authenticate media in the age of AI

In my years in law enforcement and digital forensics, I’ve learned a simple truth: evidence only matters if you can trust it.

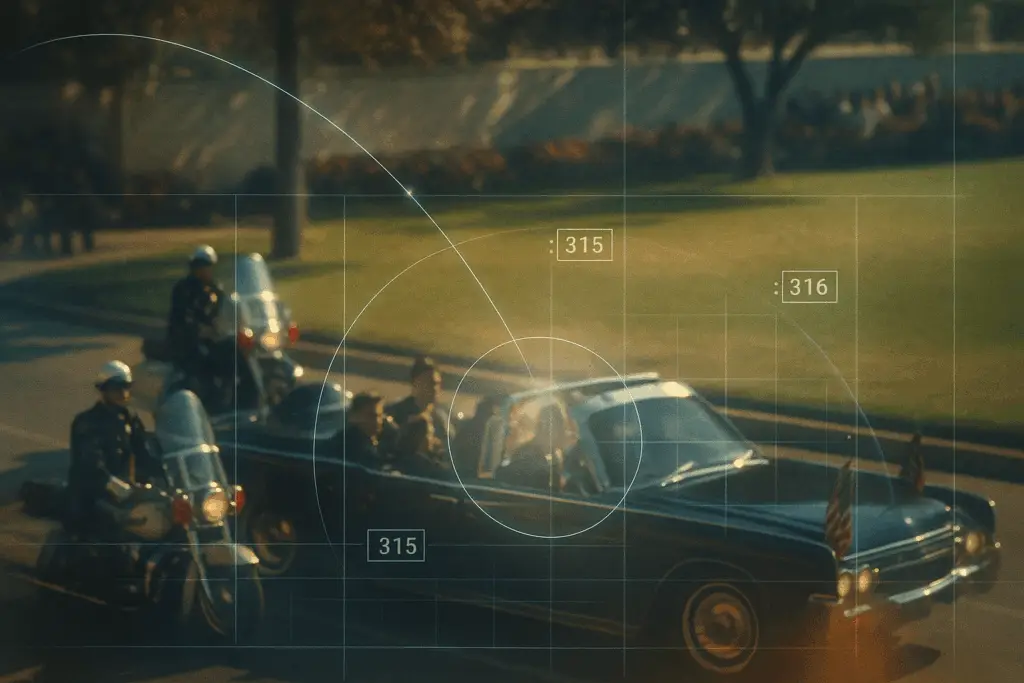

It could be a phone video capturing a crash, a vehicle’s dashcam recording showing distracted driving, or a “surveillance” image someone has sent you; the integrity of the media can make or break a case.

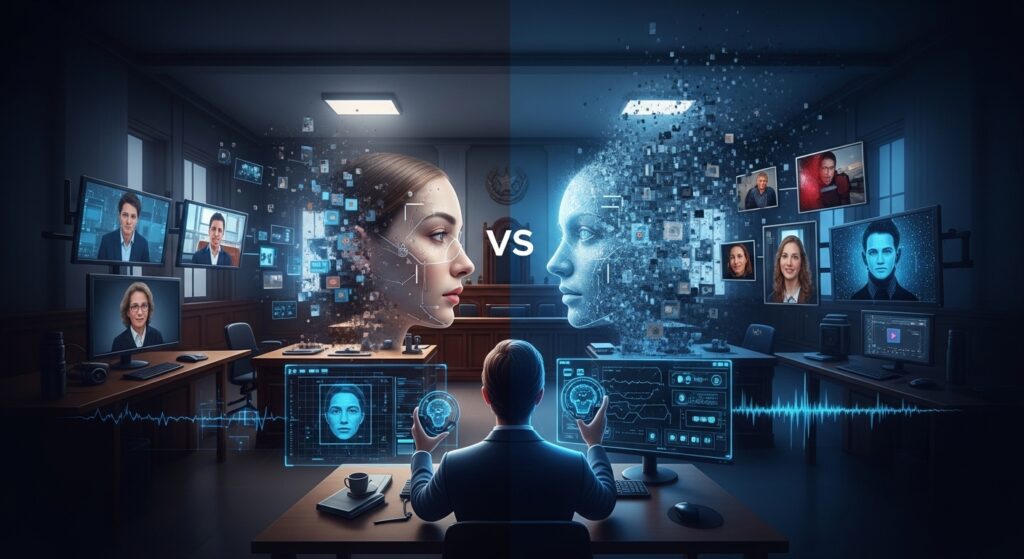

Today we face a new challenge: content that is not just manipulated, but wholly generated by artificial intelligence.

So the question becomes: how do you know the media is real? How do you know it hasn’t been AI-generated or altered in a way that undermines its value? Plainly put… is it freakin’ real or not?

Here’s a practical breakdown of what to look for, how the process works, and how your client (or you) should proceed when this kind of forensic work becomes necessary.

1. Understand why provenance and verification matter

Authenticating media isn’t just about “does this file look fake?” It’s about tracing the origin and history of the content. Who created it? When and how? Has it been edited, compressed, manipulated, or re-encoded?

These questions fall under the concept of provenance.

Verification is the subsequent step: using technical tools and forensic methods to assess that provenance and confirm integrity.

When your legal matter hinges on media that could be challenged, you need an expert who can analyze both.

At Mako Forensics we emphasize the “why” behind the evidence: your client deserves to know that a video or image they rely on isn’t vulnerable to attack because its origin or chain of custody is weak.

2. Early red flags you can look for

You don’t need to be a forensic scientist to spot the obvious things that might indicate AI-generation or manipulation. Some warning signs:

- Unnatural lighting, strange shadows, or odd reflections in images or video.

- Repeated patterns, uncanny symmetry, or unnatural facial expressions in images or video.

- Missing metadata, inconsistent timestamps, or metadata that suggests the file has been heavily re-compressed.

- Reverse image search shows little or no historical evidence of the image’s prior existence.

However, none of these automatically prove “fake by AI.” They only raise questions. That’s where forensic validation becomes necessary.
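As a rough illustration of the metadata red flags above, here is a minimal Python sketch that screens metadata already extracted from a file. The field names (`created`, `modified`, `device_model`) and the `screen_metadata` helper are hypothetical, chosen only for this example; real extraction tools label fields differently. Like the manual checks, it only raises questions and proves nothing on its own.

```python
from datetime import datetime

# Hypothetical field names; real extraction tools label these differently.
EXPECTED_FIELDS = ("created", "modified", "device_model")


def screen_metadata(meta, received_at):
    """Return plain-language flags for an examiner to follow up on."""
    flags = []

    # Missing or empty fields are worth a question, not a conclusion.
    for field in EXPECTED_FIELDS:
        if not meta.get(field):
            flags.append(f"missing field: {field}")

    created = meta.get("created")
    modified = meta.get("modified")

    # A file cannot normally be modified before it was created.
    if created and modified and modified < created:
        flags.append("modified timestamp is earlier than created timestamp")

    # A file cannot normally be created after it was handed over.
    if created and created > received_at:
        flags.append("created timestamp is later than the date the file was received")

    return flags


# Made-up sample values for illustration only.
sample = {
    "created": datetime(2024, 5, 2, 14, 30),
    "modified": datetime(2024, 5, 1, 9, 0),
    "device_model": "",
}
flags = screen_metadata(sample, received_at=datetime(2024, 5, 10))
for flag in flags:
    print(flag)
```

Each flag is a prompt for the examiner, since clock resets, file transfers, and messaging apps can all produce the same anomalies innocently.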

3. The forensic workflow: How we validate media

When your client brings a piece of media to us, here’s how we typically proceed:

- Acquisition: Capture a forensically sound copy of the original file (or as close to original as possible). Pay attention to chain of custody and preservation of metadata.

- Metadata & provenance review: Examine embedded metadata, hash values, creation timestamps, editing history. Check for anomalies in file structure.

- Content credentials and industry standards: We rely on the Coalition for Content Provenance and Authenticity (C2PA) standard and on tools from the Content Authenticity Initiative (CAI) to validate creator and process metadata.

- Technical analysis: Use forensically tested tools that can detect traces of generative AI (for example, embedding-based signatures, perceptual hashing, and inconsistencies in encoding artifacts). Academic research reports that machine-learning models can now differentiate real from AI-generated images with high accuracy on benchmark datasets, although accuracy tends to drop on generators a model has not seen and on heavily re-compressed files.

- Document findings and expert reporting: Provide a detailed report your attorney or insurer can rely upon, explaining methodology, findings, limitations and conclusions.
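The hash validation mentioned in the acquisition and metadata steps can be sketched in a few lines of Python. This is an illustrative example, not a description of any particular forensic suite: the function names and file names are assumptions, and SHA-256 is used here simply because it is a common choice. The idea is to hash the original at acquisition, then confirm that the working copy produces the same value.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so large video files never sit fully in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_working_copy(original_hash, copy_path):
    """True only if the working copy is bit-for-bit identical to the original."""
    return sha256_of_file(copy_path) == original_hash


# Demonstration with throwaway files standing in for real evidence.
with tempfile.TemporaryDirectory() as tmp:
    original = Path(tmp) / "dashcam_original.mp4"  # hypothetical file name
    working = Path(tmp) / "dashcam_working.mp4"    # hypothetical file name
    original.write_bytes(b"example video bytes")
    working.write_bytes(original.read_bytes())

    acquisition_hash = sha256_of_file(original)
    copy_ok = verify_working_copy(acquisition_hash, working)

    # Changing a single byte changes the hash, so the check now fails.
    working.write_bytes(b"example video bytez")
    altered_ok = verify_working_copy(acquisition_hash, working)

print(copy_ok, altered_ok)
```

Recording the acquisition hash in the chain-of-custody notes lets anyone later repeat the comparison and confirm that the file analyzed is the file that was received.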

4. Why this matters in distracted driving / litigation

If your work involves helping attorneys and insurers with cases such as distracted driving, vehicle collisions, insurance fraud, or corporate liability, media authenticity becomes critical:

- Imagine a driver denies being distracted and produces a dashcam video that supposedly shows they were attentive. If that file’s metadata is altered, or the file could be AI-generated or manipulated, the opposing side can challenge it.

- Or consider an insurer who is sent a “surveillance” photo of a customer allegedly at a beach during a claims investigation. If that image is AI-generated or heavily manipulated, the liability picture changes.

- As artificial-intelligence tools become more accessible and cheaper, the risk of manipulated or synthetic media being used in legal, insurance or corporate settings increases rapidly. Your clients will want an expert they can trust to sort truth from fabrication.

5. How to choose the right forensic partner

When selecting a firm to authenticate media, attorneys or insurers should ask:

- Does the examiner have certified experience in digital media forensics and a proven track record in litigation settings?

- Is the workflow documented and forensically sound, including chain of custody, acquisition, hash validation, and verifiable metadata analysis?

- Does the report clearly describe methods, tools, limitations and opinions that are defensible in court or arbitration?

- Does the firm stay ahead of generative AI trends, such as AI watermarking, deepfake detection, and embedding methods?

At Mako Forensics, I bring over two decades of experience in law enforcement and cyber forensic investigation.

My transition into private-sector forensic analysis means I understand both the investigative mindset of enforcement and the evidentiary expectations of legal and insurance contexts.

6. Final thoughts: Why transparency equals trust

In a world of increasing media manipulation, the single most important value is trust.

Clients must trust that the media they present is credible, that a court or insurer will accept it, and that the story behind it (the “why”) holds up.

When you engage a firm that approaches the work not just as a “what” (“Here is a video and I say it’s real”) but as a “why” (“Here is the full provenance, here is how we verified it, here is why it holds up”), you elevate your position.

When evidence matters, credibility matters.

And in the age of AI-generated media, your credibility needs to rest on firm forensic foundations.

If you’re dealing with any question of media authenticity, you don’t want guesswork. You want an expert.

If you, or your clients, need help validating a piece of media, reach out.