Let’s face it: trucking accidents are a mess, both at the time of the crash and after.

When a semi-truck barrels into a car on the highway, the immediate questions are usually the same.

Was the driver distracted? Was fatigue a factor? Did the trucking company push them past their legal driving hours?

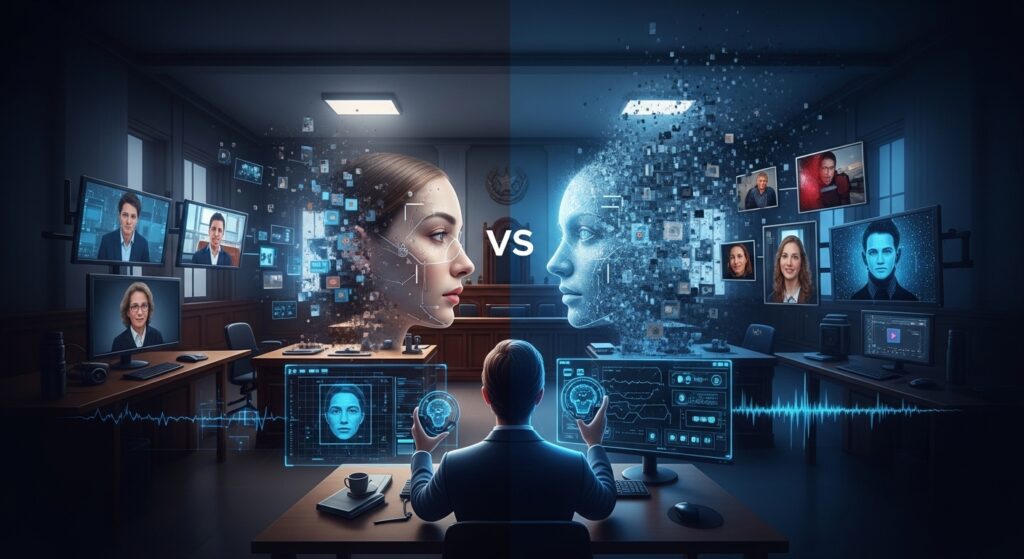

But there’s a new layer to these cases that most attorneys haven’t fully tapped into yet: driver monitoring systems.

These AI-powered in-cab cameras, meant to track truckers and ensure they’re staying alert, are quickly (but quietly) becoming one of the most important pieces of evidence in trucking litigation.

And here’s the kicker… drivers are finding ways to trick them.

Manipulating The System

It’s no secret that trucking companies have started using AI-powered driver monitoring systems from vendors like Lytx, Samsara, and Motive.

These systems are supposed to reduce accidents by tracking eye movement, head position, and even hand placement on the wheel.

If a driver starts nodding off or looking at their phone, the system flags it. Some also sound a warning and can alert the company in real time.

But here’s where things get interesting. Some truckers don’t like “Big Brother” watching over them, so they’ve started fighting back by tampering with the same systems meant to hold them accountable.

Tricks Of The Trade

Some tricks drivers use are low-tech… a simple piece of tape or a small sticker over the camera lens can block its view without triggering an alert.

Others are more sophisticated, like using infrared lights that scramble the sensor or even hacking into the truck’s software to disable the system.

Here’s the most concerning trend… some drivers have learned to game the system entirely by positioning their phone or tablet just out of view while driving. So while the monitoring system may show them with both hands on the wheel, in reality, they’re watching TikTok or responding to texts.

This is where digital forensics matters most.

If you’re handling a trucking accident case and you suspect the driver wasn’t paying attention, you can’t just take their word for it. You need evidence!

Preserve The Evidence Quickly

A proper forensic analysis can uncover whether the driver was using their phone at the time of the crash, but it goes beyond that.

The monitoring system data needs to be preserved as soon as possible after an incident occurs. Doing so gives you the most accurate and complete picture of the events that led to the incident. And let’s not forget, these monitoring systems don’t store data forever.

Many companies overwrite footage and logs after a set period of time. If you don’t act fast to preserve that data, it could be gone before you ever get the chance to see it.
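To make the time pressure concrete, here’s a minimal sketch of the arithmetic in Python. The 30-day retention window is purely an assumption for illustration; actual retention periods vary by vendor and by company policy, and the function names are hypothetical.

```python
from datetime import date, timedelta


def preservation_deadline(incident_date: date, retention_days: int) -> date:
    """Return the date on which data from the incident is expected to be overwritten."""
    return incident_date + timedelta(days=retention_days)


def days_remaining(incident_date: date, retention_days: int, today: date) -> int:
    """Return how many days are left before the expected overwrite (negative if passed)."""
    return (preservation_deadline(incident_date, retention_days) - today).days


if __name__ == "__main__":
    # Assumed values for illustration only: a 30-day retention policy.
    crash = date(2025, 1, 1)
    print(preservation_deadline(crash, 30))
    print(days_remaining(crash, 30, today=date(2025, 1, 21)))
```

The practical takeaway is the same as above: find out the actual retention period early, and get a preservation request out well before that date.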

Collecting this data may be as simple as logging into a central, web-based interface and downloading it, with no access to the truck itself required. Or it might involve more intricate access to the vehicle and monitoring system, possibly even removing the device to perform a physical extraction of the log data.

A deep dive into the truck’s system logs might reveal missing or altered data, gaps in the monitoring system’s footage, logs showing unauthorized access, or even evidence that the device was disabled leading up to the wreck.
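Spotting gaps in footage or logs can start with something as simple as comparing timestamps. The Python sketch below assumes the system writes a record (a heartbeat or a video segment) at a roughly regular interval and that those timestamps have already been exported. That is an assumption, not a description of any particular vendor’s format, and the function name is hypothetical.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, expected_interval, tolerance=timedelta(0)):
    """Return (last_seen, next_seen) pairs where consecutive records are
    further apart than expected_interval plus tolerance."""
    ordered = sorted(timestamps)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > expected_interval + tolerance:
            gaps.append((earlier, later))
    return gaps


if __name__ == "__main__":
    # Hypothetical export: one record per minute, with minutes 3 through 9 missing.
    start = datetime(2025, 1, 1, 14, 0, 0)
    records = [start + timedelta(minutes=m) for m in (0, 1, 2, 10, 11)]
    for last_seen, next_seen in find_gaps(records, timedelta(minutes=1)):
        print(f"No records between {last_seen} and {next_seen}")
```

A gap on its own doesn’t prove tampering. The truck may have been parked, or the device may have lost power or signal. But a gap that lines up with the minutes before a crash is exactly the kind of thing worth a closer forensic look.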

Turning A Blind Eye

What’s even more damning is when companies know this kind of tampering is happening and turn a blind eye.

If a trucking company knew (or should have known) that its drivers were disabling safety systems and it did nothing to stop it, that’s a serious liability issue.

That’s the kind of evidence that can turn a standard negligence case into something much bigger.

Forensic Advantage

We’re entering a new phase of trucking accident litigation, one where digital manipulation is going to play a much bigger role than ever before.

Attorneys who understand how to leverage digital forensics in these cases are going to have a serious advantage over those who don’t.

The black box used to be the gold standard in trucking cases; now, it’s just one piece of the puzzle.

AI-driven monitoring systems hold the real truth if you know where to look.

If you’re working on a case where you suspect distracted driving, system tampering, or anything else that doesn’t add up, let’s talk.

The evidence is there. It just takes the right tools and expertise to uncover it.